PG S3 M. Sc. AI First internal examination, Machine Learning, September 2024

Solutions – Machine Learning

Section A

1. Stochastic Gradient Descent (SGD) is

an optimization algorithm used for training machine learning models,

particularly neural networks. It is a variant of gradient descent that updates

model parameters using only a single data point (or a small batch) at a time.
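The update rule described above can be sketched on a toy problem. This is a minimal illustration, not a reference implementation: the function name `sgd_linear_fit`, the learning rate, the epoch count, and the noise-free data drawn from y = 3x + 1 are all assumptions chosen for the example.

```python
import random

def sgd_linear_fit(xs, ys, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b by SGD on squared error, one sample per update."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)                  # visit samples in a fresh random order
        for i in indices:
            error = (w * xs[i] + b) - ys[i]   # residual on this single sample
            w -= lr * error * xs[i]           # gradient of 0.5 * error**2 w.r.t. w
            b -= lr * error                   # gradient of 0.5 * error**2 w.r.t. b
    return w, b

# Toy, noise-free data generated from y = 3x + 1 (an illustrative assumption).
xs = [i / 10 for i in range(-10, 11)]
ys = [3.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
print(w, b)  # should land close to 3 and 1
```

Each parameter update uses the gradient from one data point only, which is what distinguishes SGD from full-batch gradient descent. A mini-batch variant would average the gradient over a few samples before each update.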

2. Multi-task learning (MTL) can be useful in many applications, such as natural language processing, computer vision, and healthcare, where multiple tasks are related or share some commonalities. It is also useful when data is limited: MTL can improve the generalization performance of the model by leveraging the information shared across tasks.

3. A regularized problem incorporates a

regularization term into the objective function to prevent overfitting and

improve model generalization. Regularization adds a penalty to the loss

function based on the complexity of the model parameters.

An under-constrained problem occurs when there are fewer constraints or conditions than required to uniquely determine a solution. In optimization and machine learning, this means that many different solutions satisfy the available constraints equally well.
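A small sketch can show how the two ideas connect. The problem below (one equation, w1 + w2 = 2, with two unknowns) and the helper `fit` are hypothetical, chosen only for illustration. Without a penalty, gradient descent ends at a different solution for each starting point; with an L2 penalty of strength `lam` (an assumed name), every start reaches the same point.

```python
def fit(w1, w2, lam, lr=0.1, steps=2000):
    """Gradient descent on (w1 + w2 - 2)**2 + lam * (w1**2 + w2**2)."""
    for _ in range(steps):
        residual = w1 + w2 - 2.0
        g1 = 2.0 * residual + 2.0 * lam * w1   # d/dw1 of the objective
        g2 = 2.0 * residual + 2.0 * lam * w2   # d/dw2 of the objective
        w1, w2 = w1 - lr * g1, w2 - lr * g2
    return w1, w2

# Under-constrained (lam = 0): the answer depends on where we start.
print(fit(0.0, 0.0, lam=0.0))   # approaches (1, 1)
print(fit(5.0, 0.0, lam=0.0))   # approaches (3.5, -1.5)

# Regularized (lam = 0.1): both starts approach w1 = w2 = 2 / 2.1.
print(fit(0.0, 0.0, lam=0.1))
print(fit(5.0, 0.0, lam=0.1))
```

The regularized solution no longer satisfies w1 + w2 = 2 exactly. That small loss in fit is the price paid for a unique, smaller-norm answer.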

4. Bayesian statistics is a branch of statistics that interprets probability as a degree of belief that an individual may possess about the occurrence of a particular event. It is named after Thomas Bayes, an 18th-century mathematician.

Bayes' most notable contribution, Bayes' Theorem, is the cornerstone of Bayesian statistics and provides a mathematical formula for updating probabilities based on new evidence.
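As a worked illustration of this updating, Bayes' Theorem states P(H | E) = P(E | H) P(H) / P(E). The sketch below applies it to a hypothetical diagnostic test. The numbers (1% prior, 95% true positive rate, 5% false positive rate) and the function name `posterior` are assumptions made up for the example.

```python
def posterior(prior, true_positive_rate, false_positive_rate):
    """P(H | E) from Bayes' Theorem for a binary hypothesis H and evidence E."""
    # P(E) by the law of total probability over H and not-H.
    evidence = true_positive_rate * prior + false_positive_rate * (1.0 - prior)
    return true_positive_rate * prior / evidence

# Hypothetical numbers: 1% of people have the condition, the test detects it
# 95% of the time, and gives a false alarm 5% of the time.
p = posterior(prior=0.01, true_positive_rate=0.95, false_positive_rate=0.05)
print(round(p, 3))  # 0.161
```

Even after a positive result, the probability of having the condition is only about 16%, because the low prior outweighs the test's accuracy.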

Section B

6. Supervised

learning is a fundamental type of machine learning where the algorithm learns

from a labeled dataset. This means that each training example in the dataset is

paired with an output label. The goal of supervised learning is to learn a

mapping from inputs to outputs that can be used to predict labels for new,

unseen data.

Unsupervised learning is a type of machine learning

where a model is trained using data that does not have labeled responses. The

goal is to discover hidden patterns or intrinsic structures within the input

data.

7. Backpropagation is an algorithm used to train neural networks. It calculates the gradient of the loss function with respect to each weight by applying the chain rule, which allows the model to update its weights and minimize the error.

Steps:

1. Compute activations for all neurons in the forward pass.

2. Calculate the error at the output layer.

3. Compute the gradient of the error with respect to the weights using the chain rule.

4. Update the weights to minimize the error.
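The four steps can be sketched for a very small network. Everything specific here is an assumption for illustration: two inputs, two sigmoid hidden neurons, one sigmoid output, no biases, a loss of 0.5 * (output - target)^2, and the names `forward`, `loss_and_gradients`, and `update`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, W2):
    """Step 1: forward pass. Returns hidden activations and the scalar output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)))
    return hidden, output

def loss_and_gradients(x, target, W1, W2):
    hidden, output = forward(x, W1, W2)
    # Step 2: error at the output layer.
    error = output - target
    # Step 3: chain rule. delta_out is dLoss/d(output pre-activation).
    delta_out = error * output * (1.0 - output)
    grad_W2 = [delta_out * h for h in hidden]
    # Push delta_out back through W2 and the hidden sigmoid.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(W2, hidden)]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    return 0.5 * error ** 2, grad_W1, grad_W2

def update(W1, W2, grad_W1, grad_W2, lr=0.5):
    """Step 4: gradient-descent weight update."""
    new_W1 = [[w - lr * g for w, g in zip(w_row, g_row)]
              for w_row, g_row in zip(W1, grad_W1)]
    new_W2 = [w - lr * g for w, g in zip(W2, grad_W2)]
    return new_W1, new_W2

# Arbitrary starting weights and a single training example.
W1 = [[0.1, -0.2], [0.4, 0.3]]
W2 = [0.5, -0.6]
x, target = [1.0, 0.5], 1.0

losses = []
for _ in range(200):
    loss, grad_W1, grad_W2 = loss_and_gradients(x, target, W1, W2)
    losses.append(loss)
    W1, W2 = update(W1, W2, grad_W1, grad_W2)

print(losses[0], losses[-1])  # the loss should shrink over the iterations
```

A common sanity check for code like this is to compare each analytic gradient with a finite-difference estimate of the loss.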

Parameter Norm Penalty

Definition: Parameter norm penalty is a

technique used in regularization to prevent overfitting by adding a penalty

term to the loss function based on the magnitude of the model parameters.

Types of Parameter Norm Penalty:

1. L1 Norm Penalty (Lasso): Adds the sum of the absolute values of the weights to the loss function.

2. L2 Norm Penalty (Ridge): Adds the sum of the squares of the weights to the loss function.
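The two penalties can be written out directly. In this sketch the penalty strength `lam` and the function names are assumptions; the text above does not name a coefficient, but in practice the penalty is usually scaled by one before being added to the loss.

```python
def l1_penalty(weights, lam):
    """Lasso-style penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """Ridge-style penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, lam, kind="l2"):
    """Total objective = data loss + chosen norm penalty."""
    if kind == "l1":
        return data_loss + l1_penalty(weights, lam)
    return data_loss + l2_penalty(weights, lam)

weights = [0.5, -1.5, 2.0]
print(l1_penalty(weights, lam=0.1))   # 0.1 * (0.5 + 1.5 + 2.0), about 0.4
print(l2_penalty(weights, lam=0.1))   # 0.1 * (0.25 + 2.25 + 4.0), about 0.65
```

Because the L2 penalty squares each weight, it punishes large weights much more heavily than small ones, whereas the L1 penalty grows linearly and tends to push some weights to exactly zero.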

8. Dataset augmentation enhances model

training by increasing dataset size and variability through transformations

such as rotation, scaling, and color adjustments for images; synonym

replacement and random insertion for text; and noise addition or pitch shifting

for audio. For tabular data, techniques like SMOTE and random noise are used.

Image Augmentation:

- Rotation: Rotating images by a certain angle.

- Translation: Shifting images along the X or Y axis.

- Scaling: Resizing images to different scales.

- Flipping: Horizontally or vertically flipping images.

- Cropping: Extracting a portion of the image.

Audio Augmentation:

- Noise Addition: Adding background noise to audio.

- Pitch Shifting: Changing the pitch of the audio.

- Time Stretching: Altering the speed without changing the pitch.

- Volume Adjustment: Increasing or decreasing the audio volume.
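A few of the transformations listed above can be sketched with plain Python lists. This is a simplified illustration under stated assumptions: an image is a nested list of pixel values, audio is a list of samples, and all function names are hypothetical. Arbitrary-angle rotation, scaling, pitch shifting, and time stretching need interpolation or signal processing and are left out.

```python
import random

def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def flip_vertical(img):
    """Reverse the order of the rows."""
    return [row[:] for row in img[::-1]]

def rotate_90_clockwise(img):
    """Rotate by exactly 90 degrees; other angles would need interpolation."""
    return [list(col) for col in zip(*img[::-1])]

def crop(img, top, left, height, width):
    """Extract a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]

def add_noise(samples, scale, rng):
    """Add zero-mean Gaussian noise to each audio sample."""
    return [s + rng.gauss(0.0, scale) for s in samples]

def adjust_volume(samples, gain):
    """Scale every sample by a constant gain."""
    return [gain * s for s in samples]

image = [[1, 2, 3],
         [4, 5, 6]]
print(flip_horizontal(image))       # [[3, 2, 1], [6, 5, 4]]
print(rotate_90_clockwise(image))   # [[4, 1], [5, 2], [6, 3]]
print(crop(image, 0, 1, 2, 2))      # [[2, 3], [5, 6]]

audio = [0.0, 0.5, -0.5, 0.25]
noisy = add_noise(audio, scale=0.01, rng=random.Random(0))
louder = adjust_volume(audio, gain=2.0)
```

Each function returns a new object and leaves the original untouched, so one source example can yield several augmented copies.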

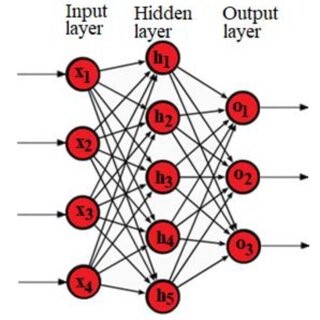

9. Deep Neural Network (DNN)

A Deep

Neural Network (DNN) consists of multiple layers of neurons (nodes) that learn

hierarchical features from data. Here’s a simplified diagram of a deep network

and its components:

Description of Components

1. Input Layer:

- Purpose: Receives the raw input data (e.g., pixel values in an image, features in tabular data).

- Example: If each input is a vector with 4 features, the input layer will have 4 nodes.

2. Hidden Layers:

- Purpose: Extract and learn features from the input data through successive layers of neurons. Each hidden layer transforms the data, enabling the network to learn complex representations.

- Layers: A network can have multiple hidden layers (e.g., 3 hidden layers in the example), with each layer consisting of multiple neurons (e.g., H1, H2, ..., H9).

3. Output Layer:

- Purpose: Produces the final output of the network, which could be probabilities (for classification tasks) or continuous values (for regression tasks).

- Example: For a classification problem with 3 classes, the output layer will have 3 nodes, each representing the probability of a class.
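The flow of data through these components can be sketched as a forward pass. The layer sizes follow the examples above (4 inputs, 3 hidden layers, 3 output classes). Splitting the nine hidden neurons as three per layer, the ReLU activation, the softmax output, the random initial weights, and all function names are assumptions made for illustration.

```python
import math
import random

def dense(inputs, weights, biases):
    """Weighted sum plus bias for every neuron in a layer."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(values)                      # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    """Random weights in [-1, 1] and zero biases (untrained network)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    activations = x
    for weights, biases in layers[:-1]:      # hidden layers
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]             # output layer
    return softmax(dense(activations, weights, biases))

rng = random.Random(0)
sizes = [4, 3, 3, 3, 3]   # input, three hidden layers, output
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
probs = forward([0.2, -0.1, 0.4, 0.7], layers)
print(probs)              # three class probabilities that sum to 1
```

With untrained random weights the probabilities carry no meaning yet; training would adjust the weights so that the correct class receives the highest probability.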

Section C

10. Overfitting vs. Underfitting

Overfitting: The model learns the training data

too well, including noise and outliers. It performs very well on training data

but poorly on new, unseen data.

- Example: A model that memorizes the

training examples instead of finding general patterns.

Underfitting: The model is too simple to capture

the underlying patterns in the data. It performs poorly on both training and

test data.

- Example: A model with too few features

or too simple a structure that fails to capture the data's complexity.
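The contrast can be sketched on synthetic data. All specifics here are assumptions for illustration: the data come from y = 2x plus Gaussian noise, a constant predictor stands in for an underfitting model, a nearest-neighbor lookup that memorizes the training set stands in for an overfitting model, and a least-squares line is included for comparison.

```python
import random

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_constant(xs, ys):
    """Too simple: always predicts the mean training label."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

def fit_line(xs, ys):
    """Least-squares straight line, matching the true structure of the data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_memorizer(xs, ys):
    """Too flexible: returns the label of the nearest training point, noise included."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def make_data(n, rng):
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys

rng = random.Random(0)
train_x, train_y = make_data(30, rng)
test_x, test_y = make_data(200, rng)

results = {}
for name, fit in [("constant", fit_constant), ("line", fit_line), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(name, results[name])   # (training error, test error)
```

The memorizer reaches zero training error by construction, yet its test error is typically worse than the line's, which is the signature of overfitting. The constant model is poor on both sets, which is the signature of underfitting.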

Semi-supervised learning is a type of machine learning that combines a small amount of labeled data with a large amount of unlabeled data during training. It leverages the labeled data to guide the learning process, while the unlabeled data helps to improve the model's performance and generalization.

11. Bias and variance are two sources of

error in machine learning models.

Bias refers to the error introduced by

approximating a real-world problem, which may be complex, with a simplified

model. High bias typically indicates that the model is too simplistic, leading

to underfitting where it fails to capture the underlying patterns of the data.

Variance, on the other hand, measures the

error introduced by the model’s sensitivity to fluctuations in the training

data. High variance often results from a model that is too flexible, capturing

noise from the training data and overfitting, which means it performs well on

training data but poorly on new, unseen data.

The bias-variance trade-off is the balance between bias and variance needed to minimize total prediction error. Reducing one typically increases the other, so an optimal model strikes a compromise where their combined contribution to the error is as small as possible, avoiding both underfitting and overfitting. This balance is crucial for building models that generalize well to new data, ensuring that they are neither too simplistic nor overly complex.
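Bias and variance can be estimated empirically by retraining a model on many independently drawn datasets and examining its predictions at one fixed point. The sketch below does this under assumptions chosen purely for illustration: a true function f(x) = x^2, Gaussian label noise, a query point x0 = 0.9, and two hypothetical models (a constant predictor and a nearest-neighbor predictor).

```python
import random

def true_f(x):
    return x * x

def sample_dataset(n, rng, noise=0.2):
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def predict_constant(xs, ys, x0):
    """Rigid model: ignores x0 and predicts the mean label."""
    return sum(ys) / len(ys)

def predict_nearest(xs, ys, x0):
    """Flexible model: copies the label of the closest training point."""
    return min(zip(xs, ys), key=lambda p: abs(p[0] - x0))[1]

def bias_variance(predict, x0, trials=500, n=20, seed=0):
    """Estimate squared bias, variance, and squared error against true_f(x0)."""
    rng = random.Random(seed)
    preds = []
    for _ in range(trials):
        xs, ys = sample_dataset(n, rng)      # a fresh training set each trial
        preds.append(predict(xs, ys, x0))
    mean_pred = sum(preds) / trials
    bias_sq = (mean_pred - true_f(x0)) ** 2
    variance = sum((p - mean_pred) ** 2 for p in preds) / trials
    error = sum((p - true_f(x0)) ** 2 for p in preds) / trials
    return bias_sq, variance, error

b_const, v_const, e_const = bias_variance(predict_constant, 0.9)
b_nn, v_nn, e_nn = bias_variance(predict_nearest, 0.9)
print("constant:", b_const, v_const, e_const)
print("nearest: ", b_nn, v_nn, e_nn)
```

In each case the squared error against the true function equals squared bias plus variance. The constant model is dominated by bias and the nearest-neighbor model by variance, and the error on noisy labels would additionally include the irreducible noise.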
